On 2022-01-19 I released an improved version of Text::Levenshtein::BV. BV means Bit Vector, because it uses a bit-parallel algorithm with runtime complexity of O(ceil(m/w)*n), where w is the width of a CPU register. It's 20 times faster than the popular Text::Levenshtein using the traditional or simple algorithm with O(n*n) on strings with length 10.

Last summer I developed a variant with the simple algorithm named Levenshtein::Simple, because working on arrays is more flexible than strings. A string is easy to split into an array of characters. The same module could be used for arrays of characters, graphemes, words, lines.

Another reason is, that the Levenshtein alignment (shortest edit script) is more important than just the distance. Most modules on CPAN calculate only the distance for similarity, which is easier and faster with LCS.

It was a surprise that my Levenshtein::Simple without tuning was 50% faster than Text::Levenshtein. During development of an XS implementation I tuned Levenshtein::Simple to beat Text::Fuzzy::PP.

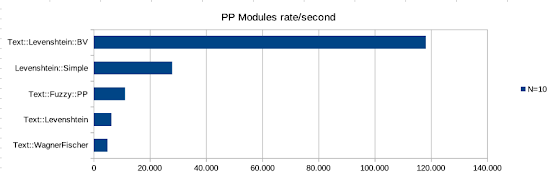

These are the results for the pure Perl (PP) implementations measured in Rate per second:

N=10 Text::WagnerFischer 4,931 Text::Levenshtein 6,339 Text::Fuzzy::PP 11,164 Levenshtein::Simple 27,926 Text::Levenshtein::BV 118,153

There are many XS versions on CPAN for calculating Levenshtein distance. Only three of them work without problems. They all use the simple algorithm. Text::Levenshtein::Flexible uses the C implementation of PostgreSQL, but has tricky code. Perl strings at the XS interface can be bytes or UTF-8. In C UTF-8 can be processed by iterating over UTF-8 or convert UTF-8 to 32-bit integers (numerical code points). Working with integers including the conversion is faster.

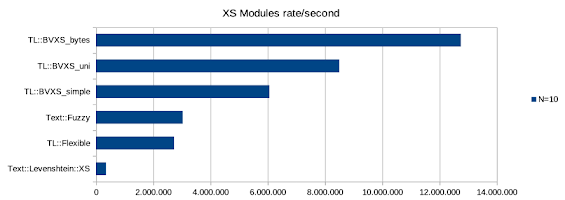

Measured via Perl the benchmarks are (TL::BVXS is mine, simple is my implementation just for fun, uni is with Unicode strings, bytes with bytes):

N=10 Text::Levenshtein::XS 347,539 TL::Flexible 2,725,258 Text::Fuzzy 3,026,401 TL::BVXS_simple 6,056,132 TL::BVXS_uni 8,495,407 TL::BVXS_bytes 12,743,110

Optimising for fun.